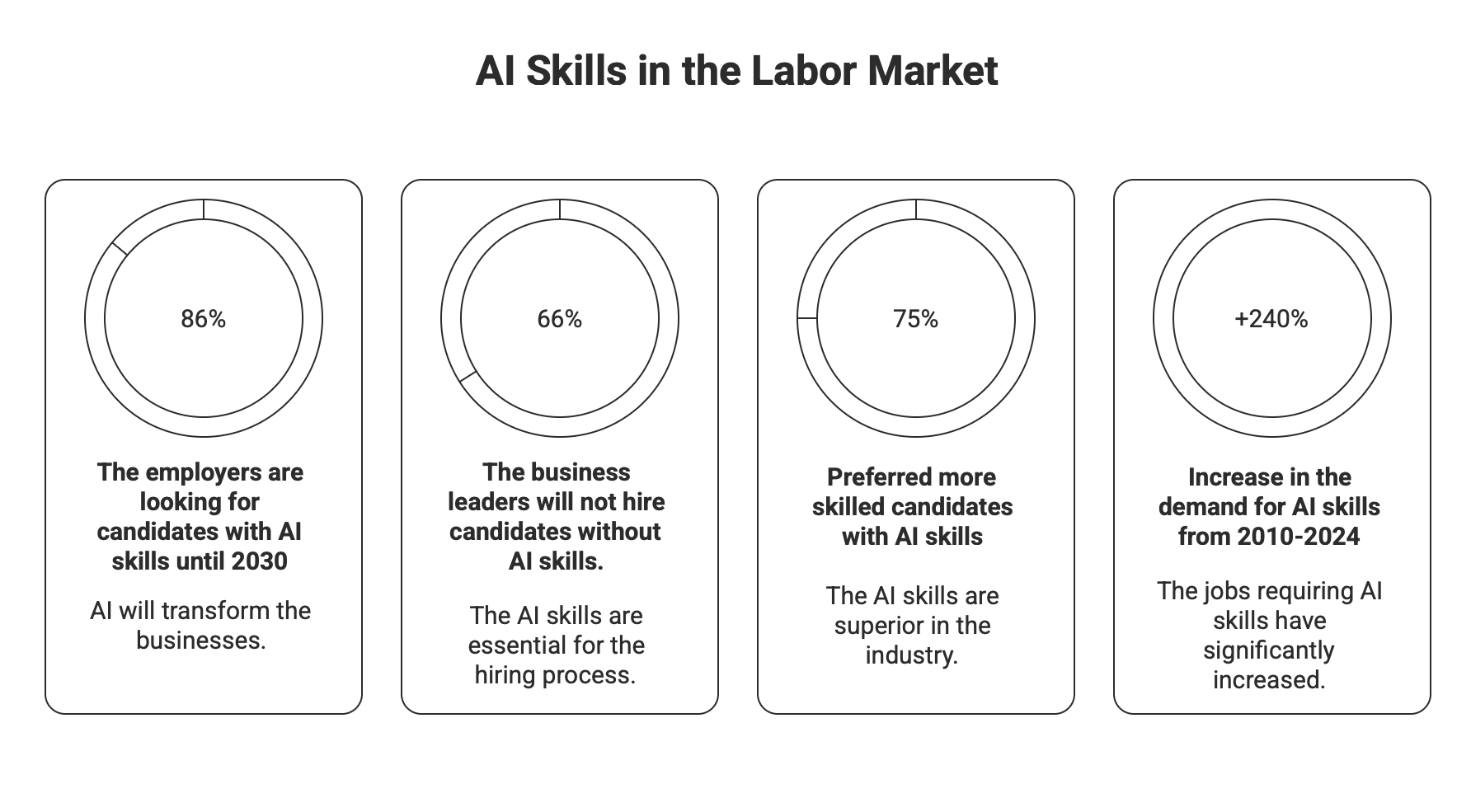

In the labor market, the World Economic Forum's Future of Jobs Report 2025 identifies AI and big data as the fastest-growing skills, with 86% of employers expecting AI to transform their businesses by 2030. Even more striking, two-thirds of business leaders surveyed say they wouldn't hire a candidate without AI skills, and nearly three-quarters would rather hire a less experienced candidate with AI skills than a more experienced candidate without them. Meanwhile, the percentage of all job postings requiring at least one AI skill increased from about 0.5 percent in 2010 to 1.7 percent in 2024, representing nearly 628,000 job postings demanding AI skills.

Students aren't waiting for policy to catch up. The proportion of university students using generative AI tools jumped from 66% in 2024 to 92% in 2025, with usage for assessments skyrocketing from 53% to 88% in just one year. Among U.S. teens ages 13 to 17, roughly two-thirds report using AI chatbots, including about three-in-ten who do so daily. Yet many school strategy conversations still start with the wrong premise: AI as an add-on. As if you can keep your instructional model intact, sprinkle in a few "AI initiatives," buy licenses, run a workshop, and call it transformation. That's not how this works. And the data proves it. According to a 2024 survey, 80% of students agree that their university's integration of AI tools doesn't currently meet their standards. Meanwhile, only 28% of institutions had a formal AI policy as of spring 2025, while another 32% were still developing one.

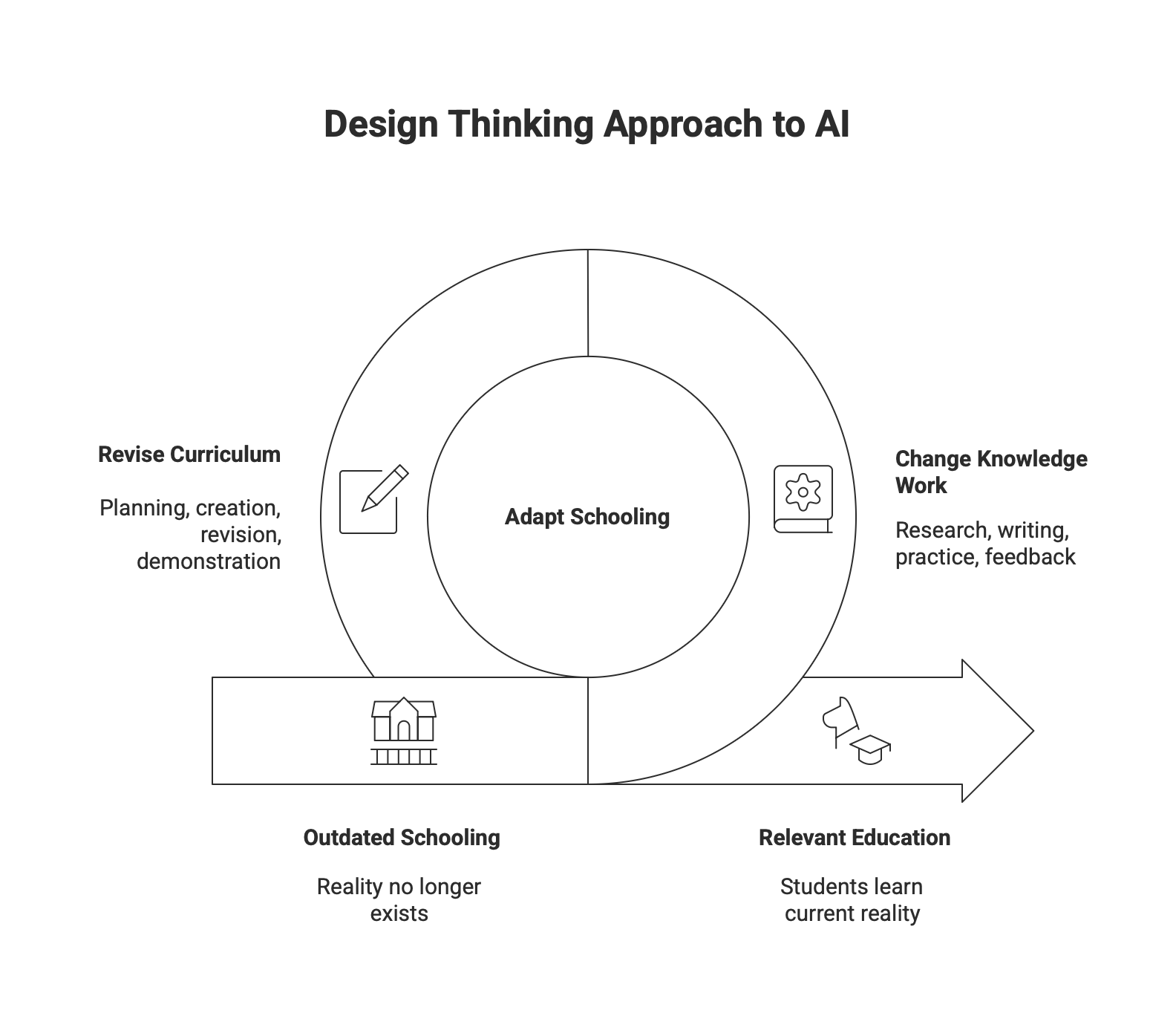

AI doesn't plug into old plans. It changes how knowledge work happens—research, writing, practice, feedback, tutoring, planning, creation, revision, and demonstration of mastery. If the work changes, schooling must change with it, or school becomes the place where students learn a version of reality that no longer exists.

Before you buy tools, redesign courses, or publish a policy, leadership should answer two clarifying questions.

First, how does your institution actually create learning value, mechanically? Not "we teach," but where does skill formation reliably happen in your system? What routines move students from novice to capable? Where does feedback come from, how fast, and how specific is it?

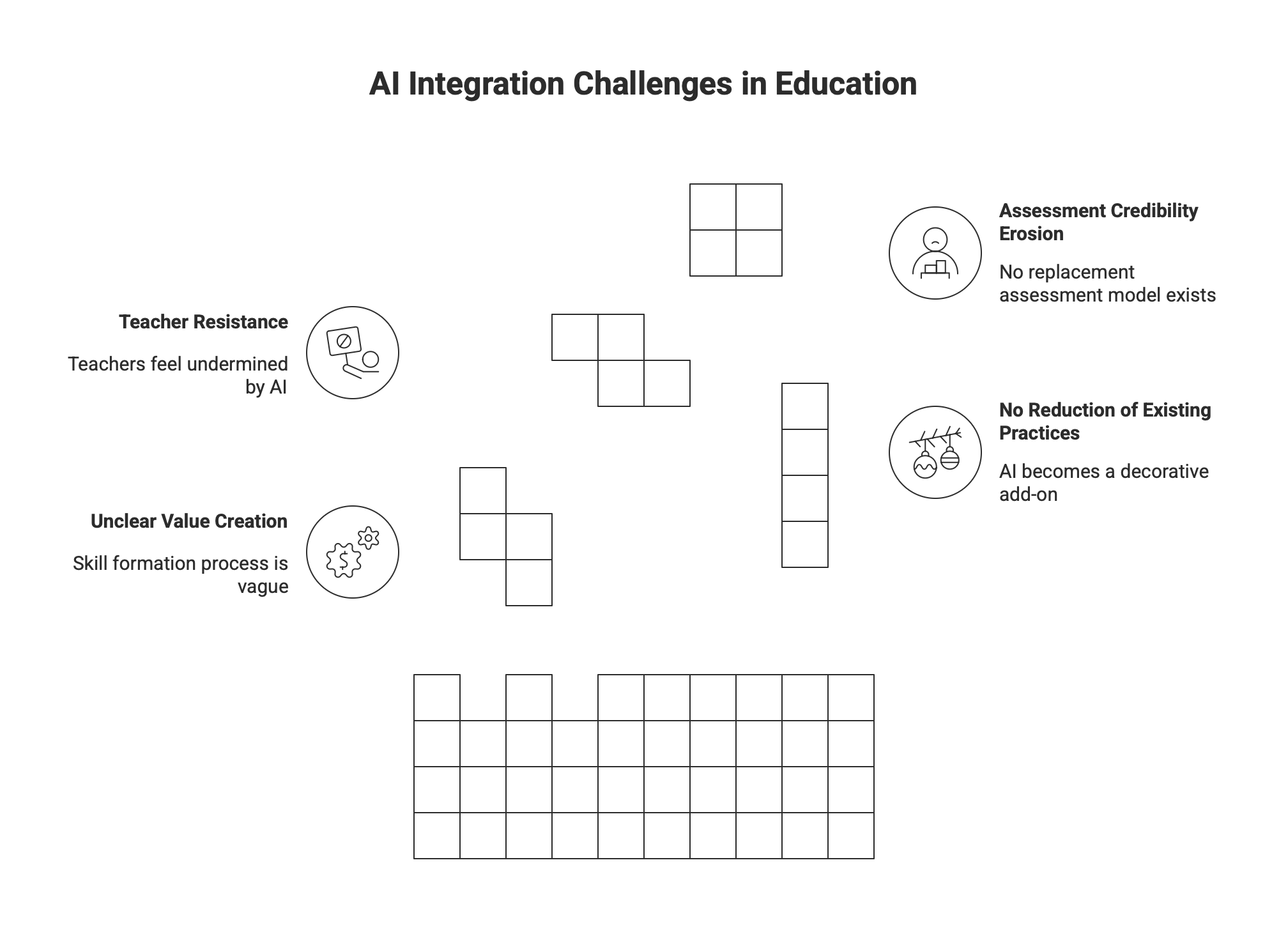

Second, what are you going to stop doing so AI becomes foundational, not decorative? If nothing gets reduced, redesigned, or retired, AI becomes a sidecar. That's how schools end up in the worst middle zone: students use AI anyway, teachers feel undermined, assessment credibility erodes, and no replacement model exists. The tension is real and measured: a 2024 Pew Research Center survey found that a quarter of public K-12 teachers say AI tools do more harm than good in education, with that figure rising to 35% among high school teachers. Another 32% see an equal mix of benefit and harm.

Real AI integration is reallocation: time (what gets taught, practiced, revised), assessment (what counts as evidence), training (what teachers and students must learn to do), and leadership attention (what becomes non-negotiable). If nothing gets cut, nothing meaningful changes.

Schools often describe their practice as a method: "Students do research by using these sources, writing this report, and presenting it." Or "Teachers assess with essays and rubrics." That's the method. The learning goal is deeper: students can form defensible claims and support them with evidence; communicate clearly to a specific audience; and reason, revise, and improve based on feedback. When you separate goal from process, everything opens up. You can redesign tasks to focus on reasoning, judgment, critique, and synthesis. You can decide what AI should do versus what humans must do. You stop "AI-ing up" a task that needed redesign anyway. This works at every scale: institution-wide, department-wide, and down to a single lesson.

Three Institutional Investments to Make AI Real in 2026

A strong AI transformation plan treats investment as more than money. It reallocates leadership time, teacher bandwidth, and strategic focus.

Build a five-year AI learning strategy, not a tool rollout. "We rolled out chatbot accounts" is not a strategy. A real strategy maps three layers. Layer A focuses on personal productivity and quality for leaders, teachers, and students—not just speed, but better planning, better feedback, better student thinking. Layer B addresses workflow redesign, looking at how learning happens daily through drafting, practice loops, coaching, formative checks, revision cycles, and study systems. Layer C tackles core process transformation across high-leverage systems such as assessment credibility, advising and personalization, mastery tracking, and intervention models. This aligns with what employers are signaling: the World Economic Forum reports that workers can expect two-fifths (39%) of their existing skill sets will be transformed or become outdated over the 2025-2030 period, making AI-related capabilities central, not optional.

AI-power the workforce and avoid creating "confident amateurs." One workshop creates the most dangerous outcome: people who know just enough to make bad decisions confidently. The numbers bear this out: while 83% of K-12 teachers use generative AI tools for either personal or school-related activities, only 24% claim strong familiarity with AI. More concerning, only about 26% of districts planned to offer AI training during the 2024-2025 school year, leaving teachers in roughly three-quarters of districts without formal preparation. Plan a competence ramp that includes protected time to practice and redesign instruction, shared task patterns through AI-enabled lesson and assessment templates, peer review of prompts, tasks, and student exemplars, and a common definition of "good use" versus "bad use." Expect a tradeoff: a short-term hit in output to build long-term capability.

Rethink systems of record into systems of learning. Keep your LMS and SIS running, but ask whether your systems support hyper-personalized learning and fast feedback loops. Do they support iterative improvement, competency evidence, portfolios, and transparency of process? AI pushes education toward systems that track mastery, reasoning steps, revision quality, and decision-making, not just final answers.

Most AI courses cover topics such as history, ethics, and how models work. Useful, but insufficient. The new baseline is AI as a way of working: generating options and choosing among them, verifying outputs through sources, data, and tests, iterating from weak draft to strong result, disclosing use responsibly, and combining AI with human judgment and domain knowledge.

The urgency is clear in both student behavior and employer expectations. Students are already using AI for schoolwork at unprecedented rates—88% for assessments in 2025, up from 53% in 2024. Meanwhile, PwC's 2025 Global AI Jobs Barometer reveals that workers with AI skills like prompt engineering command a 56% wage premium, up from 25% the previous year. Because students are already using AI, treating this literacy as an elective creates an equity gap: some students learn good practices at home, while others learn accidental practices in secret.

A Course Design Instruction Framework for AI Literacy

Here's a practical blueprint for curriculum leaders to build AI courses that function like a new literacy, transferable across subjects.

Start by defining four to six "North Star" outcomes that transfer across the board. Examples include workflow design (question to output), verification (sources, data, tests), judgment (decisions and rationale), communication (audience and constraints), integrity (authorship and disclosure), and safety and ethics (bias, privacy, misuse mitigation).

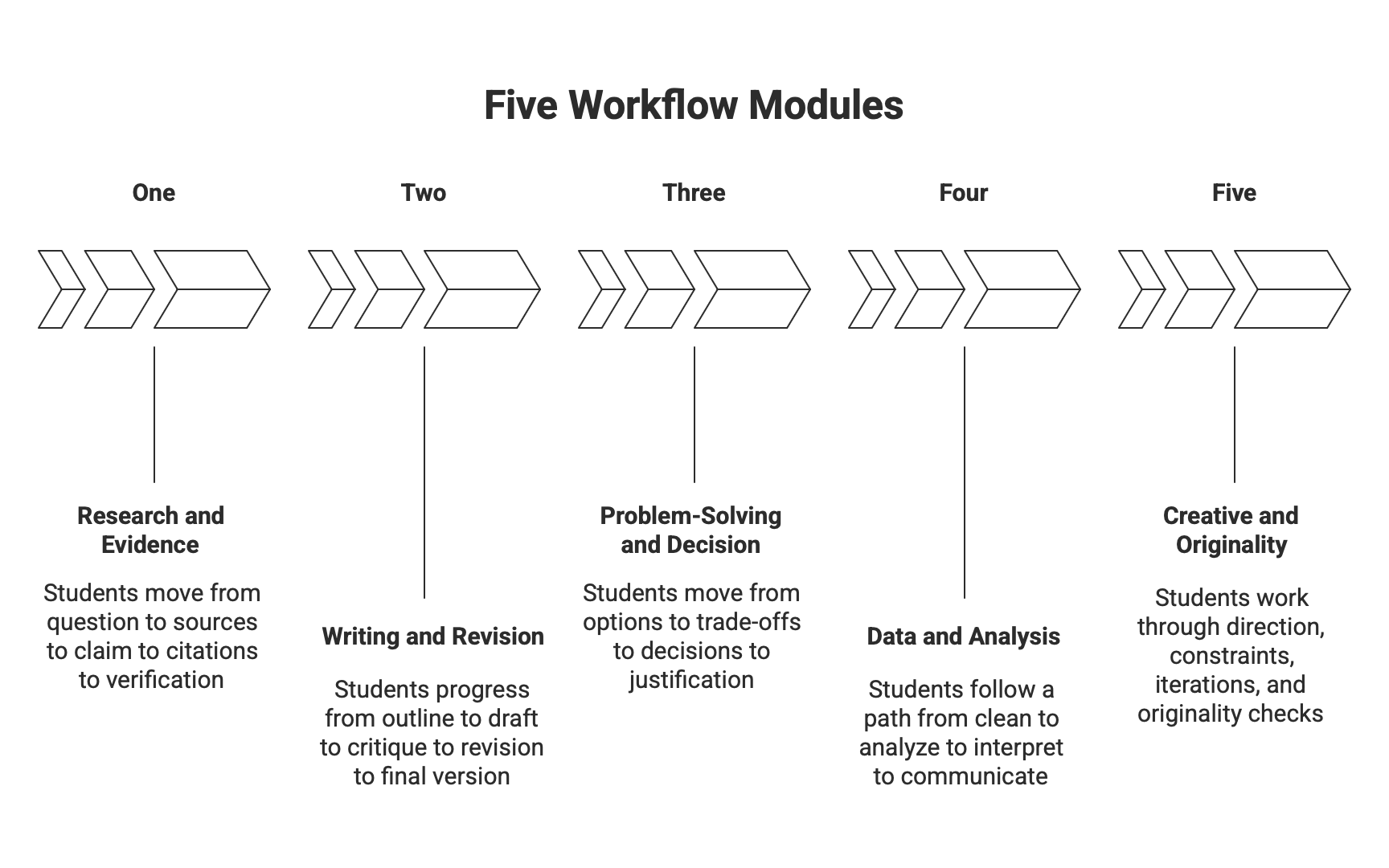

Organize modules around real workflows, not content units. Think research and evidence workflow, writing and revision workflow, problem-solving and decision workflow, data and analysis workflow, and creative direction and originality workflow.

Use a mandatory protocol that students follow every time—a repeatable "AI Work Protocol" that moves from intent to inputs to prompt strategy to output test to verification to revision to disclosure.

Make assessment process-first. Grade the evidence of thinking: prompt iterations plus rationale, verification log, revision history with commentary, and decision memo. Build rubrics with two lines: product quality and human ownership. Students can score highly with simple outputs if their reasoning, verification, and transparency are strong.

Keep the tool stack intentionally small. One primary AI interface, one research and citation workflow, one creation surface, one portfolio, and a log system. Fewer tools mean deeper mastery.

Build teacher enablement into every module with demonstration prompts, misconceptions, exemplars, integrity boundaries, and time estimates. End with a capstone that proves real-life readiness, showing AI-enabled quality, not just speed, and make it auditable via "show your work" artifacts.

How We Make This Real for Students

Course design is only half the solution. The other half is day-to-day guidance—a structure that helps students use AI properly every time they touch it, across every subject, while protecting academic integrity and evaluation fairness.

That's where we deploy a smart support layer inside the student experience: The AI Coursework Literacy and Integrity Assistant. Its purpose is to help students use AI responsibly, transparently, and strategically across all coursework, so AI becomes a thinking amplifier, not a shortcut.

In practice, this solves several problems at once. Students stop guessing what's "allowed" and start following a clear protocol. Teachers stop policing vague suspicion and start evaluating the visible process. Integrity becomes observable and documentable, not just assumed. Students build transferable AI workflows they can reuse across every subject.

The need for such a structure is evident in the data: 31% of students in 2024 said they weren't clear on when they're permitted to use generative AI in the classroom, and over 30% of students face accusations of excessive AI use and plagiarism. Meanwhile, educators' primary concern about AI tools is cheating and plagiarism, which 65% of teachers cite as the main issue they face.

The tool would live within a learning platform, perhaps under a section called Learning Skills, with AI Literacy and Integrity as the focus. A static top-level disclaimer would always be visible: "This tool does not replace learning. It helps you think better, document your process, and use AI transparently. Grades are protected when judgment, verification, and authorship are visible."

Students completing this tool would demonstrate six North Star outcomes. They'd show workflow design by creating repeatable processes from question to output. They'd practice verification by validating AI outputs using sources, data, and tests. They'd develop judgment by making and defending decisions instead of outsourcing them. They'd strengthen communication by adapting outputs to the audience, purpose, and constraints. They'd maintain integrity by disclosing AI use and maintaining authorship standards. And they'd develop safety and ethics awareness by identifying bias, privacy risks, and misuse, and by working to mitigate them.

This comprehensive approach addresses a critical gap: despite their high rates of AI use, 58% of students report not having sufficient AI knowledge and skills, and 48% don't feel adequately prepared for an AI-enabled workplace. At the same time, 59% of teachers expect students to have basic AI skills by the time they reach Grade 6 and university level.

The assistant is built around real-world workflows, not "content units." Every workflow requires the same sequence: intent, evidence, verification, revision, and disclosure.

The Five Workflows Students Actually Need

Each module follows a standard architecture that includes the workflow title, real-life context, two to four guiding questions, an AI Work Protocol checklist (mandatory), evidence upload or entry fields, a reflection prompt, and a save workflow button.

Module One covers the Research and Evidence Workflow. In the context of essays, reports, investigations, and academic claims, students move from question to sources to claim to citations to verification. They're asked to consider what question they're answering, which sources are credible and why, and what evidence supports their claim. Required evidence includes prompt iterations plus rationale, a source list with links, a verification log showing cross-checks and contradictions, and a final claim summary written by the student.

Module Two addresses the Writing and Revision Workflow. For essays, reflections, applications, and written assignments, students progress from outline to draft to critique to revision to final version. Guiding questions prompt them to clarify their position or message, identify what AI assisted with versus what they decided, and reflect on how their thinking evolved. Required evidence includes the outline, an AI-assisted draft, critique notes, a before-and-after revision comparison, and the final version.

Module Three tackles the Problem-Solving and Decision Workflow. In contexts such as STEM problems, case studies, economics, and strategy tasks, students move from options to trade-offs to decisions to justification. Required evidence includes an AI-generated options list, a student-written trade-off analysis, and a decision memo.

Module Four handles the Data and Analysis Workflow. For math, science, economics, experiments, and analytics, students follow a path from clean to analyze to interpret to communicate. Required evidence includes a raw data snapshot, AI-assisted analysis, verification checks (manual or computational), and a student interpretation summary.

Module Five explores the Creative and Originality Workflow. In design, media, storytelling, and presentations, students work through direction, constraints, iterations, and originality checks. Required evidence includes a creative brief written by the student, iteration history, originality reflection, and the final artifact.

Mandatory AI Protocol: Every Assignment, Every Time

Students must complete a checklist for every workflow. They begin with intent, clarifying what they're trying to achieve. Then they identify inputs, distinguishing what they know from what they need. They develop a prompt strategy that considers the role, constraints, examples, and rubric. They conduct an output test to define what "good" would look like. They verify using sources, cross-checks, calculations, and tests. They engage in revision, critique, and improvement rather than blindly accepting. Finally, they complete the disclosure, documenting what AI did, what they did, and what sources they used.

This is how "AI use" becomes teachable, coachable, and assessable.
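One way to picture the checklist is as a small data structure that refuses to count a workflow as done until all seven steps have an entry. The sketch below is illustrative only: the class name `ProtocolChecklist`, the step identifiers, and the rule that a blank note counts as missing are assumptions, not a description of how the assistant is actually built.

```python
from dataclasses import dataclass, field

# The seven protocol steps, in the order the article lists them.
# The identifiers themselves are hypothetical names chosen for this sketch.
PROTOCOL_STEPS = (
    "intent",
    "inputs",
    "prompt_strategy",
    "output_test",
    "verification",
    "revision",
    "disclosure",
)


@dataclass
class ProtocolChecklist:
    """One student's checklist for a single workflow (hypothetical structure)."""

    entries: dict = field(default_factory=dict)

    def record(self, step, note):
        """Store the student's note for one protocol step."""
        if step not in PROTOCOL_STEPS:
            raise ValueError(f"unknown protocol step: {step}")
        self.entries[step] = note.strip()

    def missing_steps(self):
        """Return the steps with no note, or only a blank note, in protocol order."""
        return [step for step in PROTOCOL_STEPS if not self.entries.get(step)]

    def is_complete(self):
        """A workflow counts as complete only when every step has a note."""
        return not self.missing_steps()


checklist = ProtocolChecklist()
checklist.record("intent", "Explain why the experiment's result differs from the textbook value.")
checklist.record("inputs", "I know the procedure; I need the accepted value and error sources.")
print(checklist.missing_steps())
```

Keeping the steps in a fixed tuple means a teacher view could show exactly which step a student skipped, which is the point where coaching would start.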

Assessment Design That Protects Integrity. The assessment model is process-first by default. Required grading artifacts include prompt iterations with rationale, a verification log, a revision history with commentary, a decision memo (if applicable), and a reflection on limitations, bias, and risk. The final output alone is never sufficient.

The rubric structure uses two lines. Line one evaluates technical or product quality: clarity, structure, accuracy, and completeness. Line two assesses human ownership: reasoning, judgment, verification quality, ethical awareness, and transparency. Students can score highly even with "simple outputs" if their judgment and verification are strong.

The Disclosure Builder: Making Transparency Easy. At the end of each workflow, students generate an AI Disclosure Statement documenting the AI tools used, the tasks AI assisted with, the decisions made by the student, and the sources independently verified. This disclosure is exportable and attachable to assignments, making integrity operational rather than performative.
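As a rough illustration, the four parts of the disclosure could be assembled into an exportable plain-text statement like this. The function name `build_disclosure`, the section labels, the "none reported" fallback, and the sample entries are all assumptions made for the sketch; the real export format is not specified here.

```python
def build_disclosure(tools, ai_tasks, student_decisions, verified_sources):
    """Assemble a plain-text AI Disclosure Statement from four lists of strings."""
    sections = [
        ("AI tools used", tools),
        ("Tasks AI assisted with", ai_tasks),
        ("Decisions made by the student", student_decisions),
        ("Sources independently verified", verified_sources),
    ]
    lines = ["AI Disclosure Statement"]
    for title, items in sections:
        lines.append(f"{title}:")
        if items:
            lines.extend(f"  - {item}" for item in items)
        else:
            # Make an empty section explicit instead of silently omitting it.
            lines.append("  - none reported")
    return "\n".join(lines)


statement = build_disclosure(
    tools=["Chat assistant (placeholder name)"],
    ai_tasks=["Suggested three outline structures", "Critiqued my first draft"],
    student_decisions=["Chose the second outline", "Rejected two suggested claims"],
    verified_sources=[],
)
print(statement)
```

Printing an explicit "none reported" line keeps a gap visible to the teacher, in keeping with the goal of making the process observable.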

Teacher Enablement: Not Extra Work. In the admin view, each workflow includes demonstration prompts, common misconceptions plus fixes, exemplar student work (strong, average, and risky), integrity boundaries (allowed versus not allowed), and time estimates for class and homework. This turns AI integration into a supported practice, not an individual teacher burden.

Capstone: Real-Life AI Readiness. The capstone requirement asks students to prove AI-enabled quality, not just faster completion. Options might include a policy brief with a verification log, a research synthesis with bias checks, a product proposal with trade-off memos, or a multi-modal project combining written, visual, and oral components. Mandatory evidence includes the full workflow log, verification documentation, decision memos, and disclosure statement.

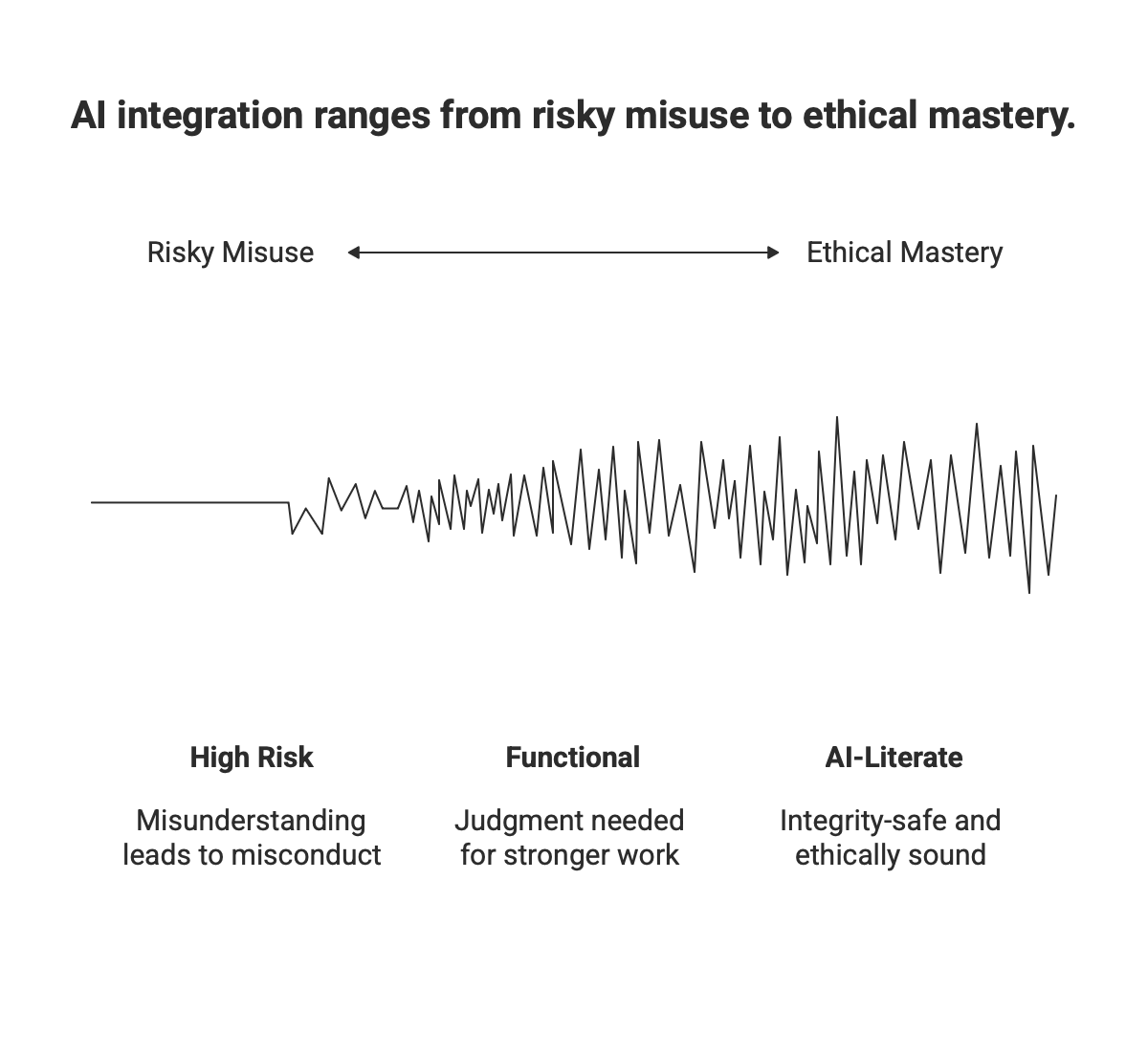

A Simple Self-Evaluation Score That Flags Risk Early. Students complete a self-evaluation scored from 0 to 20 across five dimensions, each scored from 0 to 4: workflow design, verification strength, judgment and decision-making, communication and adaptation, and integrity and disclosure.

Scores from 16 to 20 indicate AI-literate and integrity-safe work. Scores from 10 to 15 suggest functional work that needs stronger judgment. Scores from 0 to 9 indicate a high risk of misuse or misunderstanding. This creates a coaching loop before mistakes become misconduct.
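The scoring arithmetic is simple enough to sketch directly: five dimensions rated 0 to 4 are summed to a total out of 20, and the total falls into one of three bands. The function and dimension identifiers below are hypothetical names for illustration; the bands and ranges come from the description above.

```python
# Five self-evaluation dimensions, each rated 0 to 4 (identifiers are illustrative).
DIMENSIONS = (
    "workflow_design",
    "verification_strength",
    "judgment_and_decision_making",
    "communication_and_adaptation",
    "integrity_and_disclosure",
)


def total_score(ratings):
    """Sum the five ratings after checking that each dimension is rated 0 to 4."""
    if set(ratings) != set(DIMENSIONS):
        raise ValueError("ratings must cover exactly the five dimensions")
    for name, value in ratings.items():
        if not isinstance(value, int) or not 0 <= value <= 4:
            raise ValueError(f"{name} must be an integer from 0 to 4")
    return sum(ratings.values())


def risk_band(score):
    """Map a 0 to 20 total onto the three bands described in the text."""
    if not 0 <= score <= 20:
        raise ValueError("score must be between 0 and 20")
    if score >= 16:
        return "AI-literate and integrity-safe"
    if score >= 10:
        return "functional, needs stronger judgment"
    return "high risk of misuse or misunderstanding"


ratings = {
    "workflow_design": 3,
    "verification_strength": 2,
    "judgment_and_decision_making": 3,
    "communication_and_adaptation": 4,
    "integrity_and_disclosure": 2,
}
score = total_score(ratings)
print(score, risk_band(score))  # prints: 14 functional, needs stronger judgment
```

A student in the middle band, as in this example, would be a natural candidate for targeted coaching on the lowest-rated dimensions rather than a misconduct referral.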

The Leadership Move That Separates Schools in 2026

Ask one question repeatedly: "What learning experiences can we run now with AI that we couldn't run before?"

Incremental gains are real. But transformation comes from redesign: personalized coaching loops, rapid feedback, higher complexity projects with scaffolding, and assessment models that reward reasoning over performance theater.

The evidence for urgency is mounting. LinkedIn data shows that professionals are adding AI literacy skills, such as prompt engineering, at nearly 5 times the rate of other professional skills. The World Economic Forum's report indicates that technology-related trends will create 170 million jobs while displacing 92 million by 2030, resulting in a net increase of 78 million jobs. Among the fastest-growing roles are Big Data Specialists, AI and Machine Learning Specialists, and Fintech Engineers. At the same time, the unemployment rate for college graduates has risen to 5.8 percent in 2025, up from 4.6 percent the previous year, suggesting that traditional education without AI literacy may be losing its edge.

And the fastest way to make it work, without chaos, is to pair redesigned courses with smart workflow tools like the AI Coursework Literacy and Integrity Assistant, which makes thinking visible and integrity measurable.

AI doesn't replace thinking. It exposes it. When your process is clear, your integrity is protected.
